Introduction to Language and Learning Models (LLM):

In recent times, there is a trend to build powerful language models due to availability of large datasets and advancement in hardware capabilities. To be more specific, Language and Learning Models (LLM) are considered as a powerful tool in natural language processing (NLP) that can be used for various tasks such as text classification, sentiment analysis, question answering, and much more. They are designed to understand and generate human-like text based on the input they receive. When compared to the language models which was used in the past, we need more computational resources and training data because LLM mostly have billions of parameters.

Terms:

LLM: It stands for Large language models with billions of parameters which helps in excel at a large range of natural language processing tasks. LLM use cases for text-based content and it can be distributed in the following kind:

- Generation (e.g., marketing content creation)

- Summarization (e.g., legal paraphrasing)

- Translation (e.g., between languages, text-to-code)

- Classification (e.g., toxicity classification, sentiment analysis)

- Chatbot (e.g., open-domain Q+A)

Tokens: The LLM context size, or the maximum number of tokens the model can process.

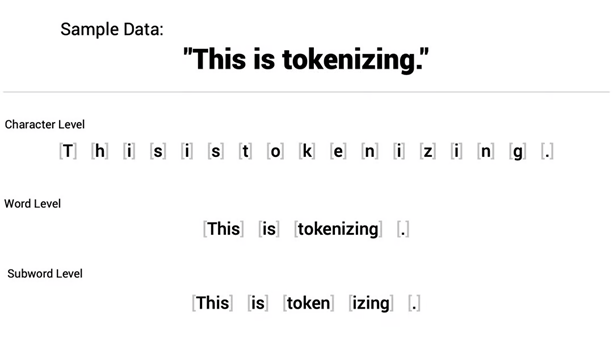

Tokenization:

- It is important to validate that the input text doesn’t exceed the threshold number of tokens supported by the model.

- To handle this, tokenization is introduced. The process of splitting the input text into smaller chunks and process them separately. Also making sure that each chuck is within the allowed limit.

For Example:

from langchain.llms import OpenAI

# Before executing the following code, make sure to have

# your OpenAI key saved in the “OPENAI_API_KEY” environment variable.

# Initialize the LLM llm = OpenAI(model_name="text-davinci-003")

# Define the input text

input_text = "your_long_input_text"

# Determine the maximum number of tokens from documentation

max_tokens = 4097

# Split the input text into chunks based on the max tokens

text_chunks = split_text_into_chunks(input_text, max_tokens)

# Process each chunk separately

results = []

for chunk in text_chunks:

result = llm.process(chunk)

results.append(result)

# Combine the results as needed

final_result = combine_results(results)

Approach:

- Character level

- Word level

- Subword level: this type of encoding increases the flexibility.

Just start with this:

# Import the transformers library

from transformers import pipeline

# Create a text generation pipeline using GPT-2

text_gen = pipeline("text-generation", model="gpt2")

# Generate a title for the blog post based on the topic

topic = "LLM with sample code"

title = text_gen(f"How to {topic}:", max_length=20, num_return_sequences=3)

# Print the generated titles

for t in title:

print(t["generated_text"])

Workflow:

- Splitting the Document into Text Chunks

- To begin, we need to split the document into smaller text chunks. This can be done using various techniques such as sentence tokenization or paragraph separation. The goal is to break down the document into manageable pieces that can be processed individually.

- Representing Text Chunks Using Embeddings

- Once we have our text chunks, we can represent them using embeddings. Embeddings are numerical representations of words or sentences that capture their semantic meaning. There are several pre-trained models available that can generate high-quality embeddings for different languages.

- Storing Text Chunks in VectorDB

- To enable efficient retrieval of text chunks, we can store them in a vector database (VectorDB). VectorDB is a specialized database that is optimized for similarity search based on vector representations. It allows us to quickly find similar text chunks based on their embeddings.

```python

# Import the DocArray and vectordb libraries

from docarray import BaseDoc, DocList

from vectordb import InMemoryExactNNVectorDB

# Define a document schema with text and embedding fields

class Document(BaseDoc):

text: str = ""

embedding: NdArray[768]

# Create a list of documents with some dummy text and embeddings

doc_list = [

Document(text="This is the first document.", embedding=np.random.rand(768)),

Document(text="This is the second document.", embedding=np.random.rand(768)),

Document(text="This is the third document.", embedding=np.random.rand(768)),

]

# Convert the list of documents into a DocList object

doc_list = DocList[Document](doc_list)

# Create an in-memory vector database with the document schema

db = InMemoryExactNNVectorDB[Document](workspace="./workspace")

# Index the documents in the vector database

db.index(inputs=doc_list)

- Retrieval Using Similarity Search

- With our text chunks stored in VectorDB, we can perform similarity search to find the most relevant chunks for a given query. Similarity search algorithms compare the embeddings of the query and the stored chunks to identify the most similar ones. This allows us to retrieve relevant information quickly and accurately.

```python

# Import the vectordb library

from vectordb import InMemoryExactNNVectorDB

# Load the vector database from the workspace

db = InMemoryExactNNVectorDB[Document].load(workspace="./workspace")

# Create a query document with some text and embedding

query = Document(text="This is a query document.", embedding=np.random.rand(768))

# Convert the query document into a DocList object

query = DocList[Document]([query])

# Perform a similarity search using the query document and get the top 10 results

results = db.search(inputs=query, limit=10)

# Print the results

for r in results[0].matches:

print(r.text, r.score)

```

Some possible outputs are:

– This is the second document. 0.87

– This is the third document. 0.76

– This is the first document. 0.65

- Passing Text Chunks into LLM for Answering

- Finally, we can pass the retrieved text chunks into our LLM model for answering specific questions or generating responses. The LLM model uses advanced techniques such as deep learning and attention mechanisms to understand the context and generate human-like text.

```python

# Import the Transformers library

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

# Load an LLM model and tokenizer (you can choose any model from https://huggingface.co/models)

model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

tokenizer = AutoTokenizer.from_pretrained("t5-base")

# Define a question or a summary prefix

question = "What is this document about?"

prefix = "summarize:"

# Loop through the retrieved documents

for r in results[0].matches:

# Concatenate the question or prefix with the document text

input_text = question + " " + r.text

# Encode the input text into tokens

input_tokens = tokenizer.encode(input_text, return_tensors="pt")

# Generate an output text using the LLM model

output_tokens = model.generate(input_tokens, max_length=50)

# Decode the output tokens into text

output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)

# Print the output text

print(output_text)

```

Some possible outputs are:

– This document is about the second document in the list.

– A summary of the third document in the list.

– The first document in the list is a simple introduction.

Reference:

- Large language model – Wikipedia. https://en.wikipedia.org/wiki/Large_language_model.

- What is a Document Store Database?. https://database.guide/what-is-a-document-store-database/.

- How do Document Stores work? – IONOS. https://www.ionos.com/digitalguide/hosting/technical-matters/document-database/.

- vectordb · PyPI. https://pypi.org/project/vectordb/.

- Vector Database | Microsoft Learn. https://learn.microsoft.com/en-us/semantic-kernel/memories/vector-db.

- VectorDB: a Python vector database you just need – no more, no less. https://jina.ai/news/vectordb-a-python-vector-database-you-just-need-no-more-no-less/

- What is Similarity Search? | Pinecone. https://www.pinecone.io/learn/what-is-similarity-search/.

- NLP — Efficient Semantic Similarity Search with Faiss … – Medium. https://medium.com/lett-digital/nlp-efficient-semantic-similarity-search-with-faiss-facebook-ai-similarity-search-and-gpus-274771d0709a.

- Similarity Search for Time-Series Data – GeeksforGeeks. https://www.geeksforgeeks.org/similarity-search-for-time-series-data/.

- StarCoder: A State-of-the-Art LLM for Code – Hugging Face. https://huggingface.co/blog/starcoder.

- Replit – How to train your own Large Language Models. https://blog.replit.com/llm-training.

- A developer’s guide to prompt engineering and LLMs – The GitHub Blog. https://github.blog/2023-07-17-prompt-engineering-guide-generative-ai-llms/.